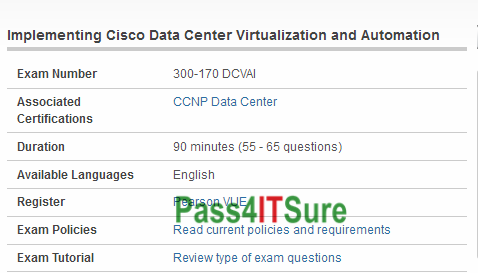

Which CCNP Data Center exam should you prepare for first? The Implementing Cisco Data Center Virtualization and Automation (DCVAI, 300-170) exam is a 90-minute assessment of 55 to 65 questions that is associated with the CCNP Data Center certification. Pass4itsure offers 300-170 dumps and a dumps video intended to help candidates prepare for and pass the Cisco 300-170 exam. If you work with Cisco technology and want to prepare quickly without going through a lot of books and manuals, you can download the Implementing Cisco Data Center Virtualization and Automation practice material at https://www.pass4itsure.com/300-170.html.

[2018 Cisco 300-170 Dumps Video From Google Drive]: https://drive.google.com/open?id=0BwxjZr-ZDwwWZzkydEcwa19TQWM

[2018 Cisco 300-165 Dumps Video From Google Drive]: https://drive.google.com/open?id=0BwxjZr-ZDwwWeUJYS1plcHg0T3M

The Following Cisco 300-170 Dumps (102 Q&As) Are Newly Published By Pass4itsure

QUESTION 1.Which one of the following choices lists the four steps of interaction with a database?

A.Connect, Read, Write, Disconnect

B.Connect, Send a command, Write, Disconnect

C.Connect, Query, Read/Write, Disconnect

D.Connect, Send a command, Display results, Disconnect

Correct:D
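The four steps in the keyed answer (connect, send a command, display results, disconnect) can be sketched as follows. This is only an illustration in Python with the standard-library `sqlite3` module and an in-memory database, not the Perl database interface the question presumably targets; the table name `states` and its rows are invented for the example.

```python
import sqlite3

def list_states():
    # 1. Connect (an in-memory database, used here only for illustration).
    conn = sqlite3.connect(":memory:")
    try:
        # Hypothetical sample data so the query has something to return.
        conn.execute("CREATE TABLE states (name TEXT)")
        conn.executemany("INSERT INTO states VALUES (?)",
                         [("Kentucky",), ("Ohio",)])
        # 2. Send a command.
        cursor = conn.execute("SELECT name FROM states ORDER BY name")
        # 3. Display the results (collected first, then printed).
        names = [row[0] for row in cursor]
        for name in names:
            print(name)
        return names
    finally:
        # 4. Disconnect.
        conn.close()
```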

QUESTION 2.Which one of the following variables is used if no variable was specified in a pattern match, substitution operator or print statement?

A.$nul

B.$#

C.$_

D.$*

Correct:C

QUESTION 3.Which choice demonstrates the correct syntax for the DELETE command?

A.DELETE MyDatabase WHERE VALUES state=Kentucky AND color=blue

B.DELETE MyDatabase WHERE state=Kentucky AND color=blue

C.DELETE FROM MyDatabase WHERE state=Kentucky AND color=blue

D.DELETE state=Kentucky AND color=blue FROM MyDatabase

Correct:C
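The `DELETE FROM ... WHERE ...` form in option C can be tried out with a small sketch. It assumes Python's standard-library `sqlite3` module and an in-memory table; the column types and sample rows are invented, and the values are bound as parameters rather than written inline as in the question.

```python
import sqlite3

def delete_blue_kentucky(rows):
    """Delete rows where state is Kentucky and color is blue; return the rest."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE MyDatabase (state TEXT, color TEXT)")
        conn.executemany("INSERT INTO MyDatabase VALUES (?, ?)", rows)
        # DELETE FROM <table> WHERE <condition>
        conn.execute(
            "DELETE FROM MyDatabase WHERE state = ? AND color = ?",
            ("Kentucky", "blue"),
        )
        remaining = conn.execute(
            "SELECT state, color FROM MyDatabase ORDER BY state, color"
        )
        return remaining.fetchall()
    finally:
        conn.close()
```

Calling it with rows for Kentucky/blue, Kentucky/red, and Ohio/blue leaves only the last two rows in the table.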

QUESTION 4.Consider the following HTML code: < INPUT TYPE=text NAME=state VALUE=>> Given this code, which one of the following choices best describes how the data should be written to a file?

A.print OUTPUT, "state" . param("State: ");

B.print OUTPUT "State: " . param("state");

C.print OUTPUT, "State: " . ("state");

D.OUTPUT "state" . param("State: ");

Correct:B

QUESTION 5.Which choice best demonstrates how the print statement may be used to print HTML code?

A.print HTML>>;

B.print ;

C.print (“HTML”);

D.print (“”);

Correct:D

QUESTION 6.Consider the following code: `open(INFILE, "myfile");` Given this code, which one of the following choices demonstrates reading in scalar context?

A.`$file = <INFILE>;`

B.`$file < <INFILE>;`

C.`%file = <INFILE>;`

D.`@file <= <INFILE>;`

Correct:A

QUESTION 7.Consider the following code: `open(INPUT, "Chapter1");` Given this code, which one of the following choices demonstrates reading in list context?

A.`%file = <INPUT>;`

B.`@file = <INPUT>;`

C.`@%file < <INPUT>;`

D.`$file = <INPUT>;`

Correct:B

QUESTION 8.Which set of operators is used to read and write to a file in random-access mode?

A.< >

B.< >>

C.+< +>

D.-<< ->>

Correct:C

QUESTION 9.The do method duplicates the function of which of the following methods?

A.param and execute

B.post and prepare

C.prepare and execute

D.post and execute

Correct:C

QUESTION 10.Which one of the following statements allows for variable substitution?

A.$sql=qq{SELECT * FROM MyDatabase WHERE state=$state};

B.$sql=q{SELECT * FROM MyDatabase WHERE state=$state};

C.$sql=q{SELECT * FROM MyDatabase WHERE state=$state};

D.$sql=qq{SELECT * FROM MyDatabase WHERE state=$state};

Correct:A

QUESTION 11.Antonio is naming a Perl variable. Which choice includes characters he may use?

A._, &, *

B.+, #, $

C.$, @, %

D.%, #, @

Correct:C

QUESTION 12.Which choice best describes how to access individual elements of an array?

A.Use an index starting with 1

B.Use an index starting with 0

C.Use a pointer

D.Use a key value

Correct:B

QUESTION 13.Before allowing a user to submit data with a Web form, which of the following tasks should be performed?

A.The data should be validated by the script.

B.The data should be validated by the programmer.

C.The data should be compared to valid data stored in a list.

D.The data should be passed through the validate() method.

Correct:A

QUESTION 14.The CGI.pm module can be used to perform which one of the following tasks?

A.GET or POST data

B.Load external variables

C.Read large amounts of text into the script

D.Access environment variables

Correct:D

QUESTION 15.What is the main danger in using cookies and hidden fields?

A.They can be deleted.

B.They can be edited.

C.They can be blocked by the browser.

D.They can be viewed.

Correct:B

QUESTION 16.The start_html method of CGI.pm yields which one of the following results?

A.`"content-type:A\n\n"`

B.`<HTML>`

C.`<FORM METHOD=A ACTION=B ENCODING=C>`

D.`<INPUT TYPE="submit" VALUE=A>`

Correct:B

QUESTION 17.Which method is used in a Perl script to access the variables POSTed by an HTML form?

A.prepare();

B.param();

C.header ();

D.post();

Correct:B

QUESTION 18.The file mode specifies which one of the following?

A.The access permissions

B.The inode number

C.The file’s owner

D.How the file is opened

Correct:D

QUESTION 19.List context versus scalar context is determined by which one of the following?

A.The compiler

B.The debugger

C.The interpreter

D.The environment

Correct:C

QUESTION 20.Which one of the following choices best describes data tainting?

A.Tainted data can be used by eval, system, or exec.

B.Tainted data cannot be accessed by the script.

C.Variables containing external data cannot be used outside the script.

D.Data tainting is enabled using the D switch.

Correct:C

QUESTION 21

When is the earliest point at which the reduce method of a given Reducer can be called?

A. As soon as at least one mapper has finished processing its input split.

B. As soon as a mapper has emitted at least one record.

C. Not until all mappers have finished processing all records.

D. It depends on the InputFormat used for the job.

Correct Answer: C

QUESTION 22

Which describes how a client reads a file from HDFS?

A. The client queries the NameNode for the block location(s). The NameNode returns the block location(s) to the client. The client reads the data directly off the DataNode(s).

B. The client queries all DataNodes in parallel. The DataNode that contains the requested data responds directly to the client. The client reads the data directly off the DataNode.

C. The client contacts the NameNode for the block location(s). The NameNode then queries the DataNodes for block locations. The DataNodes respond to the NameNode, and the NameNode redirects the client to the DataNode that holds the requested data block(s). The client then reads the data directly off the DataNode.

D. The client contacts the NameNode for the block location(s). The NameNode contacts the DataNode that holds the requested data block. Data is transferred from the DataNode to the NameNode, and then from the NameNode to the client.

Correct Answer: A

QUESTION 23

You are developing a combiner that takes as input Text keys, IntWritable values, and emits Text keys, IntWritable values. Which interface should your class implement?

A. Combiner <Text, IntWritable, Text, IntWritable>

B. Mapper <Text, IntWritable, Text, IntWritable>

C. Reducer <Text, Text, IntWritable, IntWritable>

D. Reducer <Text, IntWritable, Text, IntWritable>

E. Combiner <Text, Text, IntWritable, IntWritable>

Correct Answer: D

QUESTION 24

Identify the utility that allows you to create and run MapReduce jobs with any executable or script as the mapper and/or the reducer.

A. Oozie

B. Sqoop

C. Flume

D. Hadoop Streaming

E. mapred

Correct Answer: D

QUESTION 25

How are keys and values presented and passed to the reducers during a standard sort and shuffle phase of MapReduce?

A. Keys are presented to reducer in sorted order; values for a given key are not sorted.

B. Keys are presented to reducer in sorted order; values for a given key are sorted in ascending order.

C. Keys are presented to a reducer in random order; values for a given key are not sorted.

D. Keys are presented to a reducer in random order; values for a given key are sorted in ascending order.

Correct Answer: A

QUESTION 26

Assuming default settings, which best describes the order of data provided to a reducer's reduce method?

A. The keys given to a reducer aren't in a predictable order, but the values associated with those keys always are.

B. Both the keys and values passed to a reducer always appear in sorted order.

C. Neither keys nor values are in any predictable order.

D. The keys given to a reducer are in sorted order, but the values associated with each key are in no predictable order.

Correct Answer: D
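The ordering described in Questions 25 and 26 can be imitated in a few lines. This is a toy, single-process sketch in Python, not Hadoop code: the function name `shuffle_and_sort` and the sample pairs are invented, and values here simply keep their arrival order, which stands in for the "no predictable order" of a real cluster.

```python
from collections import defaultdict

def shuffle_and_sort(mapper_output):
    """Group (key, value) pairs by key and return the groups in sorted key order.

    Values are left in arrival order; they are deliberately not sorted.
    """
    groups = defaultdict(list)
    for key, value in mapper_output:
        groups[key].append(value)
    return [(key, groups[key]) for key in sorted(groups)]

# Hypothetical mapper output, in the order it happened to arrive.
pairs = [("pear", 3), ("apple", 9), ("pear", 1), ("apple", 2)]
grouped = shuffle_and_sort(pairs)
# Keys come out sorted ("apple" before "pear"); the values for "apple"
# stay as [9, 2], which is not ascending.
```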

QUESTION 27

You wrote a map function that throws a runtime exception when it encounters a control character in input data. The input supplied to your mapper contains twelve such characters in total, spread across five file splits. The first four file splits each have two control characters and the last split has four control characters. Identify the number of failed task attempts you can expect when you run the job with mapred.max.map.attempts set to 4:

A. You will have forty-eight failed task attempts

B. You will have seventeen failed task attempts

C. You will have five failed task attempts

D. You will have twelve failed task attempts

E. You will have twenty failed task attempts

Correct Answer: E
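The arithmetic behind the keyed answer is worth spelling out: each of the five splits is handled by one map task, an attempt fails at the first control character it meets, and each task is retried until it has used its four attempts. The sketch below encodes that reasoning in Python; the function name is invented, and it makes the simplifying assumption that every failing task runs through all of its attempts.

```python
def expected_failed_attempts(control_chars_per_split, max_attempts):
    # An attempt fails on the first bad character, so only whether a split
    # contains a control character matters, not how many it contains.
    failing_tasks = sum(1 for count in control_chars_per_split if count > 0)
    # Assumption: every failing task uses up all of its attempts.
    return failing_tasks * max_attempts

print(expected_failed_attempts([2, 2, 2, 2, 4], 4))  # 20
```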

QUESTION 28

You want to populate an associative array in order to perform a map-side join. You've decided to put this information in a text file, place that file into the Distributed Cache, and read it in your Mapper before any records are processed. Identify which method in the Mapper you should use to implement code for reading the file and populating the associative array.

A. combine

B. map

C. init

D. configure

Correct Answer: D

QUESTION 29

You've written a MapReduce job that will process 500 million input records and generate 500 million key-value pairs. The data is not uniformly distributed. Your MapReduce job will create a significant amount of intermediate data that it needs to transfer between mappers and reducers, which is a potential bottleneck.

A custom implementation of which interface is most likely to reduce the amount of intermediate data transferred across the network?

A. Partitioner

B. OutputFormat

C. WritableComparable

D. Writable

E. InputFormat

F. Combiner

Correct Answer: F
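Why a combiner cuts intermediate traffic can be seen in a word-count style sketch. This is plain Python rather than Hadoop code; `map_words` and `combine` are invented names, and the two input lines are made up. The point is only that summing counts on the mapper side leaves fewer pairs to send to the reducers.

```python
from collections import Counter

def map_words(lines):
    """Emit one (word, 1) pair per word, as a word-count mapper would."""
    return [(word, 1) for line in lines for word in line.split()]

def combine(pairs):
    """Sum the counts per key locally, before anything crosses the network."""
    totals = Counter()
    for key, count in pairs:
        totals[key] += count
    return sorted(totals.items())

mapper_output = map_words(["a b a", "a c a"])
combined = combine(mapper_output)
# Six pairs leave the map function, but only three leave the combiner.
print(len(mapper_output), len(combined))
```

The saving depends on how often keys repeat within one mapper's output; with all-distinct keys a combiner of this kind removes nothing.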

QUESTION 30

Can you use MapReduce to perform a relational join on two large tables sharing a key? Assume that the two tables are formatted as comma-separated files in HDFS.

A. Yes.

B. Yes, but only if one of the tables fits into memory.

C. Yes, so long as both tables fit into memory.

D. No, MapReduce cannot perform relational operations.

E. No, but it can be done with either Pig or Hive.

Correct Answer: A
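One common way to do such a join is a reduce-side join: the map phase tags each record with its source table and emits it under the join key, and the reduce phase pairs up the records that share a key. The following is a single-process Python sketch of that idea, not Hadoop code; the function name, the tags `L` and `R`, and the sample rows are invented, and the join key is assumed to be the first comma-separated field.

```python
from collections import defaultdict

def reduce_side_join(left_rows, right_rows):
    """Inner-join two lists of CSV lines on their first field."""
    # Map phase: tag each record with its source and group it under the key.
    tagged = defaultdict(lambda: {"L": [], "R": []})
    for tag, rows in (("L", left_rows), ("R", right_rows)):
        for line in rows:
            key, rest = line.split(",", 1)
            tagged[key][tag].append(rest)
    # Reduce phase: for each key, pair every left record with every right one.
    joined = []
    for key in sorted(tagged):
        for left in tagged[key]["L"]:
            for right in tagged[key]["R"]:
                joined.append(f"{key},{left},{right}")
    return joined

# Hypothetical tables sharing an id column.
users = ["1,alice", "2,bob"]
orders = ["1,book", "1,pen", "3,lamp"]
print(reduce_side_join(users, orders))
```

This toy version holds both inputs in memory. In a real job the grouping is done by the shuffle, so a reducer only needs to buffer the records for the key it is currently processing, which is why neither table has to fit into memory.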

QUESTION 31

You have just executed a MapReduce job. Where is intermediate data written to after being emitted from the Mapper's map method?

A. Intermediate data is streamed across the network from the Mapper to the Reducer and is never written to disk.

B. Into in-memory buffers on the TaskTracker node running the Mapper that spill over and are written into HDFS.

C. Into in-memory buffers that spill over to the local file system of the TaskTracker node running the Mapper.

D. Into in-memory buffers that spill over to the local file system (outside HDFS) of the TaskTracker node running the Reducer.

E. Into in-memory buffers on the TaskTracker node running the Reducer that spill over and are written into HDFS.

Correct Answer: C

QUESTION 32

You want to understand more about how users browse your public website, such as which pages they visit prior to placing an order. You have a farm of 200 web servers hosting your website. How will you gather this data for your analysis?

A. Ingest the server web logs into HDFS using Flume.

B. Write a MapReduce job, with the web servers for mappers, and the Hadoop cluster nodes for reducers.

C. Import all user clicks from your OLTP databases into Hadoop, using Sqoop.

D. Channel these clickstreams into Hadoop using Hadoop Streaming.

E. Sample the weblogs from the web servers, copying them into Hadoop using curl.

Correct Answer: A

QUESTION 33

MapReduce v2 (MRv2/YARN) is designed to address which two issues?

A. Single point of failure in the NameNode.

B. Resource pressure on the JobTracker.

C. HDFS latency.

D. Ability to run frameworks other than MapReduce, such as MPI.

E. Reduce complexity of the MapReduce APIs.

F. Standardize on a single MapReduce API.

Correct Answer: BD

QUESTION 34

You need to run the same job many times with minor variations. Rather than hardcoding all job configuration options in your driver code, you've decided to have your Driver subclass org.apache.hadoop.conf.Configured and implement the org.apache.hadoop.util.Tool interface. Identify which invocation correctly passes mapred.job.name with a value of Example to Hadoop.

A. hadoop mapred.job.name=Example MyDriver input output

B. hadoop MyDriver mapred.job.name=Example input output

C. hadoop MyDriver -D mapred.job.name=Example input output

D. hadoop setproperty mapred.job.name=Example MyDriver input output

E. hadoop setproperty ( mapred.job.name=Example ) MyDriver input output

Correct Answer: C

Our tested practice material is intended to make clearing the Cisco 300-170 exam easier. Implementing Cisco Data Center Virtualization and Automation (DCVAI), also known as the 300-170 exam, is a Cisco exam associated with the CCNP Data Center certification, and the practice material aims to cover the knowledge points of the real exam. It is meant to help even first-time candidates get certified on the first attempt without registering with other online exam-preparation sites. The Pass4itsure Cisco 300-170 dumps (102 Q&As) are updated and verified by experts. Our simple-to-use 300-170 dumps, available at https://www.pass4itsure.com/300-170.html, have been carefully prepared by our team of experts to help you get through the Cisco Certified Network Professional Data Center exam quickly and easily.